Locking Down Blog Site

Securing Static Website

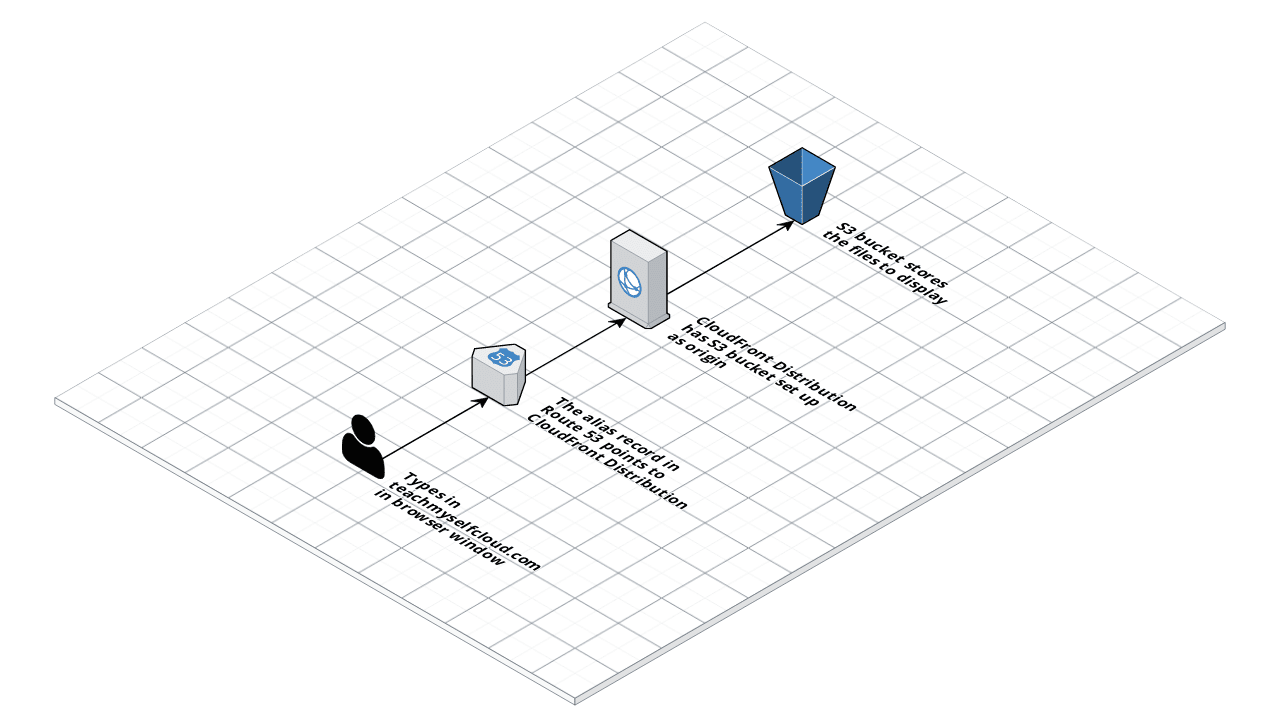

In a previous blog, I wrote about setting up this website. It is a simple static site with a basic architecture as shown below:

The teachmyselfcloud domain is registered with Route 53. This automatically creates a hosted zone with an NS record and a start of authority (SOA) record. I created an alias A record that points to the CloudFront distribution, which sits in front of the S3 bucket that holds the individual objects.

The CloudFront distribution is configured to use the custom domain name, and uses an SSL certificate imported from AWS Certificate Manager. It is also set up to redirect all HTTP requests to HTTPS.

The issue was that the S3 bucket, as a public-facing site, allowed anyone to access the objects. Viewers could still bypass CloudFront and reach the website directly, and insecurely, through the S3 endpoint.

Enforce use of HTTPS

The easiest way to prevent insecure access was to add a bucket policy that denies access over HTTP. I added the following policy to the bucket:

    {
        "Version": "2012-10-17",
        "Id": "EnforceHTTPSOnly",
        "Statement": [
            {
                "Sid": "Enforce that files are only accessed over HTTPS",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:GetObject",
                "Resource": "arn:aws:s3:::{bucket}/*",
                "Condition": {
                    "Bool": {
                        "aws:SecureTransport": "false"
                    }
                }
            }
        ]
    }
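If you look after more than one bucket, it can be handy to generate this policy rather than hand-edit the `{bucket}` placeholder each time. The snippet below is a minimal sketch of that idea. The helper name `build_https_only_policy` and the bucket name `example-bucket` are my own placeholders, and the code only builds and prints the JSON; it does not talk to AWS.

```python
import json


def build_https_only_policy(bucket: str) -> dict:
    """Return a bucket policy that denies s3:GetObject over plain HTTP.

    The helper name and the bucket argument are illustrative placeholders.
    """
    return {
        "Version": "2012-10-17",
        "Id": "EnforceHTTPSOnly",
        "Statement": [
            {
                "Sid": "Enforce that files are only accessed over HTTPS",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                # Matches requests that did NOT arrive over TLS.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


if __name__ == "__main__":
    # Print the policy so it can be pasted into the bucket policy editor.
    print(json.dumps(build_https_only_policy("example-bucket"), indent=2))
```

The printed JSON is the same document as the policy above, with the bucket name filled in.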

When I then tried to access the HTTP endpoint, I got back a 403 Forbidden error.

Enforce access via CloudFront

The first option above worked in enforcing secure access, but it still allowed users to access the objects directly. The next upgrade was to enforce that users can only access the site via the CloudFront distribution.

This can be done by amending the origin settings to use an Origin Access Identity. This is a special CloudFront user that you can use to give CloudFront access to an S3 bucket.

This also meant amending the bucket permissions. I chose to do this directly by applying the following policy:

    {
        "Version": "2012-10-17",
        "Id": "PolicyForCloudFrontPrivateContent",
        "Statement": [
            {
                "Sid": " Grant a CloudFront Origin Identity access to support private content",
                "Effect": "Allow",
                "Principal": {
                    "AWS": "arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity {ID}"
                },
                "Action": "s3:GetObject",
                "Resource": "arn:aws:s3:::{bucket}/*"
            }
        ]
    }
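To see why this policy shuts out direct requests, it helps to remember that anything not explicitly allowed is denied, and that an explicit Deny always wins. The snippet below is a toy model of that rule, not the real IAM evaluation engine. It ignores conditions and assumes nothing else (ACLs, other statements) grants access. The ID `EXAMPLEID`, the bucket name `example-bucket` and the function names are placeholders of mine.

```python
from fnmatch import fnmatchcase

# Placeholder OAI principal and bucket; substitute your own values.
OAI_ARN = "arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity EXAMPLEID"

POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"AWS": OAI_ARN},
            "Action": "s3:GetObject",
            "Resource": "arn:aws:s3:::example-bucket/*",
        }
    ],
}


def principal_matches(statement_principal, caller_arn):
    """True if the statement's Principal covers the caller (None = anonymous)."""
    if statement_principal == "*":
        return True
    allowed = statement_principal.get("AWS", [])
    if isinstance(allowed, str):
        allowed = [allowed]
    return caller_arn in allowed


def is_allowed(policy, caller_arn, action, resource):
    """Simplified evaluation: explicit Deny wins, otherwise an Allow is required."""
    allowed = False
    for stmt in policy["Statement"]:
        if not principal_matches(stmt["Principal"], caller_arn):
            continue
        if stmt["Action"] != action:
            continue
        if not fnmatchcase(resource, stmt["Resource"]):
            continue
        if stmt["Effect"] == "Deny":
            return False
        allowed = True
    return allowed  # False here means an implicit deny


if __name__ == "__main__":
    obj = "arn:aws:s3:::example-bucket/index.html"
    print("CloudFront OAI:", is_allowed(POLICY, OAI_ARN, "s3:GetObject", obj))
    print("Anonymous viewer:", is_allowed(POLICY, None, "s3:GetObject", obj))
```

In this model the CloudFront identity matches the Allow statement, while an anonymous viewer hitting S3 directly matches nothing and falls through to the implicit deny.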

This meant that any time I tried to access an object directly from S3, I got the following error:

    <Error>
        <Code>AccessDenied</Code>
        <Message>Access Denied</Message>
        <RequestId>4C39DD9DA0EB58D9</RequestId>
        <HostId>...</HostId>
    </Error>
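If you want to check for this response from a script rather than by eye, the error body is plain XML and easy to pick apart. The snippet below is a small sketch using only the Python standard library. The function name `parse_s3_error` is my own, and the body is the response shown above, with the elided `HostId` left as it is.

```python
import xml.etree.ElementTree as ET

# The error body returned by S3, copied from the response above.
ERROR_BODY = """<Error>
  <Code>AccessDenied</Code>
  <Message>Access Denied</Message>
  <RequestId>4C39DD9DA0EB58D9</RequestId>
  <HostId>...</HostId>
</Error>"""


def parse_s3_error(body: str) -> dict:
    """Turn an S3 <Error> document into a dict of tag name -> text."""
    root = ET.fromstring(body)
    return {child.tag: (child.text or "") for child in root}


if __name__ == "__main__":
    error = parse_s3_error(ERROR_BODY)
    print(error["Code"], "-", error["Message"])
```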

Restrict allowed IP addresses

Another common requirement is restricting access to a specific IP address or a range of IP addresses. Rather than using a bucket policy, I took a look at AWS WAF.

This is done with a Web ACL, which gives you fine-grained control over web requests. You can allow or block requests that:

- Originate from an IP address or a range of IP addresses

- Originate from a specific country or countries

- Contain a specified string or match a regular expression (regex) pattern in a particular part of requests

- Exceed a specified length

- Appear to contain malicious SQL code (known as SQL injection)

- Appear to contain malicious scripts (known as cross-site scripting)

When creating a new Web ACL, you first specify the AWS resource to associate it with, which in this case is the CloudFront distribution. I then created an IP match condition specifying the addresses or ranges I wanted to support. After this, I created a new rule and added the condition to it. The rule allows requests from the specified IP addresses, and the Web ACL then blocks all requests that don’t match any rule.
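The decision the Web ACL makes can be sketched in a few lines of Python. This only models the logic (allow when the source IP matches the condition, block otherwise) and is not the AWS WAF API. The function name `web_acl_action` and the address ranges are placeholders of mine, taken from the blocks reserved for documentation.

```python
from ipaddress import ip_address, ip_network

# Hypothetical allow-list: one range and one single address (a /32).
ALLOWED_RANGES = [
    ip_network("192.0.2.0/24"),
    ip_network("203.0.113.10/32"),
]


def web_acl_action(source_ip: str, allowed=ALLOWED_RANGES) -> str:
    """Return "ALLOW" if the IP matches the condition, else the default "BLOCK"."""
    addr = ip_address(source_ip)
    if any(addr in network for network in allowed):
        return "ALLOW"
    return "BLOCK"  # default action for requests that match no rule


if __name__ == "__main__":
    for ip in ("192.0.2.55", "203.0.113.10", "198.51.100.1"):
        print(ip, "->", web_acl_action(ip))
```

Note that a single address is expressed as a `/32` range, which is also how an IP match condition expects individual IPv4 addresses to be written.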