Testing improvements to Lambda VPC Networking

Background

Yesterday, Chris Munns updated a blog post stating that the Lambda VPC networking improvements have been fully rolled out to the US East (Ohio), EU (Frankfurt), and Asia Pacific (Tokyo) regions. This gave me a chance to run a simple test to see if I could notice any reduction in latency.

The Setup

To set up the test, I created a quick project that deploys two Lambda functions: one attached to a VPC and one that is not. You can find the project here if you want to run it yourself.

The project used the Serverless Framework to create all the required infrastructure. To keep things minimal, it created a VPC, a single public subnet, and a security group. Alongside the two Lambda functions, it also created an API Gateway to provide an HTTP endpoint, and enabled X-Ray tracing.
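The function code itself isn't shown here. As a hedged illustration only, a handler like the following hypothetical Python one would be enough for this kind of test: it does almost no work and returns a 200 response in the shape that API Gateway's Lambda proxy integration expects, so both functions could run identical code and differ only in their networking.

```python
import json


def handler(event, context):
    """Hypothetical minimal handler for an API Gateway proxy integration.

    Both the VPC-attached and the non-VPC function could deploy this same
    code, so the work done per request is identical and trivial.
    """
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": "hello"}),  # body must be a string
    }


if __name__ == "__main__":
    print(handler({}, None))
```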

Deployment

Deploying the functions is straightforward. The provider section of the serverless.yml file includes the following line:

region: ${opt:region, 'eu-central-1'}

Because the region falls back to 'eu-central-1' when no --region option is passed, running the following command deploys everything into the EU (Frankfurt) region:

sls deploy

Make a note of the two URLs that are returned, as you will need them for testing. I also stood up the stack in the EU (Ireland) region using the following command:

sls deploy --region=eu-west-1
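The ${opt:region, 'eu-central-1'} variable tells the Serverless Framework to use the --region option when it is given and to fall back to eu-central-1 otherwise. The Python sketch below only mimics that lookup for the two commands above; resolve_region() and the REGION_NAMES table are hypothetical helpers, not part of the framework, and the table is there just to show which region code belongs to which name.

```python
from typing import List

DEFAULT_REGION = "eu-central-1"  # the fallback value in serverless.yml

# Region codes and their console names (only the ones relevant here).
REGION_NAMES = {
    "eu-central-1": "EU (Frankfurt)",
    "eu-west-1": "EU (Ireland)",
    "eu-west-2": "EU (London)",
    "us-east-2": "US East (Ohio)",
    "ap-northeast-1": "Asia Pacific (Tokyo)",
}


def resolve_region(argv: List[str]) -> str:
    """Hypothetical: return the --region value if present, else the default."""
    for i, arg in enumerate(argv):
        if arg.startswith("--region="):      # --region=eu-west-1
            return arg.split("=", 1)[1]
        if arg == "--region" and i + 1 < len(argv):  # --region eu-west-1
            return argv[i + 1]
    return DEFAULT_REGION


if __name__ == "__main__":
    for cmd in (["deploy"], ["deploy", "--region=eu-west-1"]):
        region = resolve_region(cmd)
        print(cmd, "->", region, REGION_NAMES.get(region, "unknown"))
```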

Testing

I ran the tests using Artillery, which can be installed with the following command:

npm install -g artillery

Once Artillery is installed and the demo is deployed, you can run a test with the following command:

artillery quick --count 50 -n 10 {URL}

The --count option creates the specified number of “virtual users”, each of which sends -n HTTP GET requests to the URL, so this run makes 50 × 10 = 500 requests in total.
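To make the load pattern concrete, here is a rough Python approximation of what these two options ask for. It is a sketch under stated assumptions, not how Artillery is implemented: run_quick() and fetch() are hypothetical names, all virtual users start at once, and each one waits for a response before sending its next request.

```python
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def fetch(url: str) -> int:
    """Send one HTTP GET request and return its status code."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        return resp.status


def run_quick(url: str, count: int, n: int,
              get: Callable[[str], int] = fetch) -> List[float]:
    """Rough stand-in for 'artillery quick --count COUNT -n N URL'.

    Starts `count` virtual users concurrently; each sends `n` sequential
    GET requests. Returns every request latency in milliseconds.
    """
    def virtual_user(user_id: int) -> List[float]:
        latencies = []
        for _ in range(n):
            start = time.perf_counter()
            get(url)  # status code ignored; a real tool would tally it
            latencies.append((time.perf_counter() - start) * 1000.0)
        return latencies

    with ThreadPoolExecutor(max_workers=count) as pool:
        per_user = list(pool.map(virtual_user, range(count)))
    # Flatten [[user 0 latencies], [user 1 latencies], ...] into one list.
    return [ms for user in per_user for ms in user]
```

Calling run_quick(url, count=50, n=10) against one of the deployed endpoints would return 500 latencies, matching the "Requests completed: 500" line in the reports. Passing a different get function makes it easy to exercise the logic without any network access.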

Results

Below are the results for the VPC-attached Lambda in the EU (Ireland) region, where the optimisations have not yet been rolled out (Artillery reports latency in milliseconds):

    Summary report @ 00:02:16(+0100) 2019-09-28
      Scenarios launched:  50
      Scenarios completed: 50
      Requests completed:  500
      RPS sent: 23.19
      Request latency:
        min: 42.7
        max: 20448.5
        median: 62.9
        p95: 10630.5
        p99: 11442.1
      Scenario counts:
        0: 50 (100%)
      Codes:
        200: 500
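Comparing runs by eye gets tedious. The following is a minimal sketch, assuming the summary layout shown above, that pulls the Request latency figures out of the report text so runs can be compared programmatically. parse_latency() is a hypothetical helper, and other Artillery versions may format their reports differently.

```python
import re
from typing import Dict

# The EU (Ireland) summary from above.
REPORT = """\
Summary report @ 00:02:16(+0100) 2019-09-28
  Scenarios launched:  50
  Scenarios completed: 50
  Requests completed:  500
  RPS sent: 23.19
  Request latency:
    min: 42.7
    max: 20448.5
    median: 62.9
    p95: 10630.5
    p99: 11442.1
  Scenario counts:
    0: 50 (100%)
  Codes:
    200: 500
"""

STAT_LINE = re.compile(r"(min|max|median|p95|p99):\s*([\d.]+)$")


def parse_latency(report: str) -> Dict[str, float]:
    """Return the Request latency stats (milliseconds) from a summary report."""
    stats: Dict[str, float] = {}
    in_latency = False
    for line in report.splitlines():
        text = line.strip()
        if text == "Request latency:":
            in_latency = True
            continue
        match = STAT_LINE.match(text)
        if in_latency and match:
            stats[match.group(1)] = float(match.group(2))
        elif text.endswith(":"):
            # Any other section header ("Scenario counts:", "Codes:") ends the block.
            in_latency = False
    return stats


if __name__ == "__main__":
    print(parse_latency(REPORT))
```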

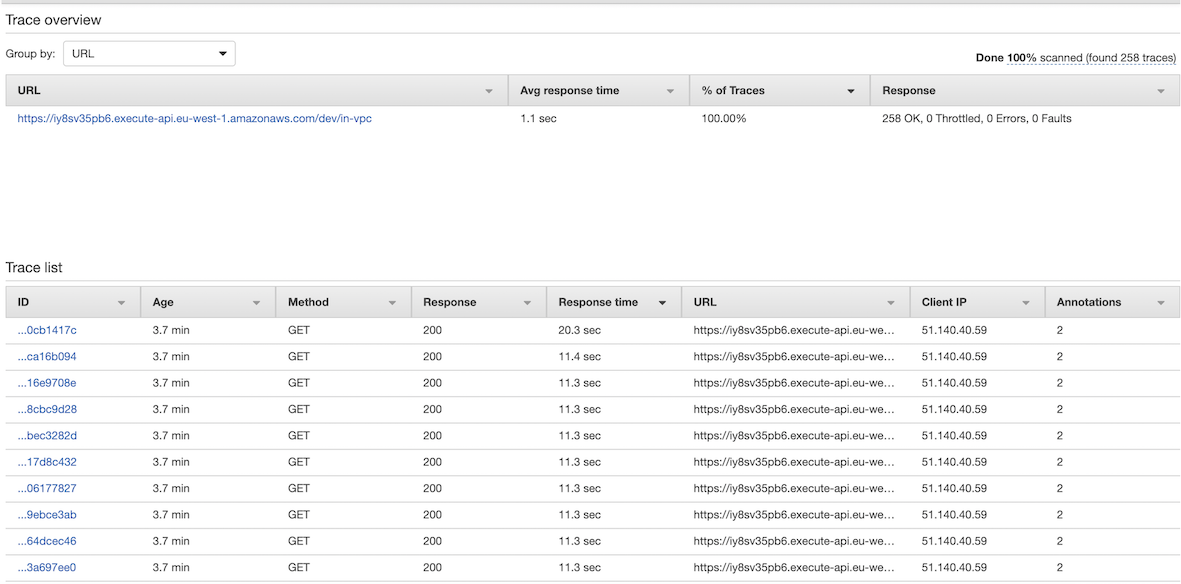

The p95 latency was over 10 seconds, and a number of these 10+ second response times are visible in the X-Ray trace overview:

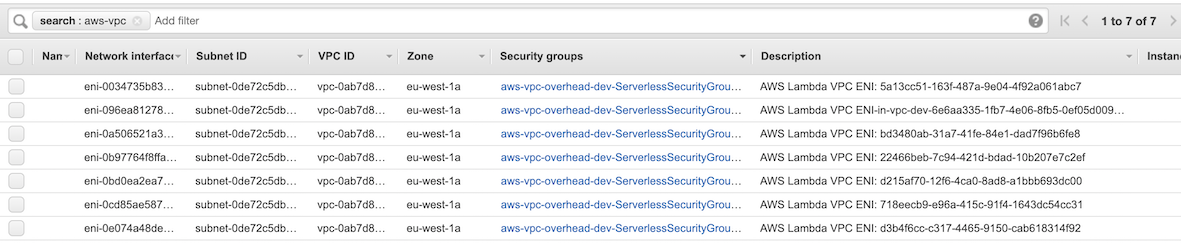

A big reason for this is the number of new network interfaces that had to be created and attached as the function scaled out.

How did this compare with the VPC-attached Lambda in the EU (Frankfurt) region?

The difference was incredible. Here is the output from the same Artillery test:

    Summary report @ 00:01:10(+0100) 2019-09-28
      Scenarios launched:  50
      Scenarios completed: 50
      Requests completed:  500
      RPS sent: 203.25
      Request latency:
        min: 55.8
        max: 608.2
        median: 110.1
        p95: 475.3
        p99: 570.2
      Scenario counts:
        0: 50 (100%)
      Codes:
        200: 500

That is an amazing decrease of more than 95% in p95 latency, from 10630.5 ms to 475.3 ms.
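The percentage is just (before - after) / before × 100, applied to the two reports. A small Python check, with the figures copied from the two summaries:

```python
# Latency figures (ms) copied from the two Artillery summaries.
IRELAND = {"p95": 10630.5, "p99": 11442.1, "max": 20448.5}  # not yet optimised
FRANKFURT = {"p95": 475.3, "p99": 570.2, "max": 608.2}      # optimised


def pct_decrease(before: float, after: float) -> float:
    """Percentage decrease from before to after (negative if it grew)."""
    return (before - after) / before * 100.0


for stat in ("p95", "p99", "max"):
    print(f"{stat}: {pct_decrease(IRELAND[stat], FRANKFURT[stat]):.1f}% lower")
```

This prints 95.5% for p95, 95.0% for p99 and 97.0% for the maximum latency.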

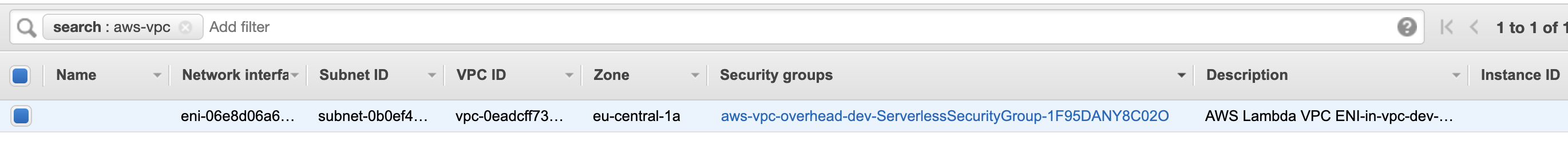

The blog post explains that the network interface is now created when the Lambda function is created, rather than when it is invoked. This network interface in your VPC is mapped to a Hyperplane ENI, which can scale to support large numbers of concurrent connections. A quick check confirmed that, after the optimisation, this test needed only a single ENI, which was created when the Lambda function was deployed.
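One way to do that kind of check is sketched below. With the Hyperplane model you would expect roughly one Lambda ENI per unique subnet and security-group combination, not one per concurrent execution. The sketch assumes that Lambda-managed ENIs have a description starting with "AWS Lambda VPC ENI" (an assumption worth verifying in your own account); lambda_enis_by_placement() and describe_vpc_enis() are hypothetical helpers, and the second one needs boto3 and AWS credentials.

```python
from collections import Counter
from typing import Dict

# Assumption: Lambda-managed ENIs carry a description with this prefix.
LAMBDA_ENI_PREFIX = "AWS Lambda VPC ENI"


def lambda_enis_by_placement(response: Dict) -> Counter:
    """Count Lambda ENIs per (subnet, security groups) combination.

    `response` has the shape returned by EC2 DescribeNetworkInterfaces.
    """
    counts: Counter = Counter()
    for eni in response.get("NetworkInterfaces", []):
        if not eni.get("Description", "").startswith(LAMBDA_ENI_PREFIX):
            continue  # skip ENIs that do not look Lambda-managed
        groups = tuple(sorted(g["GroupId"] for g in eni.get("Groups", [])))
        counts[(eni["SubnetId"], groups)] += 1
    return counts


def describe_vpc_enis(vpc_id: str, region: str) -> Dict:
    """Fetch ENIs in one VPC (first page of results only)."""
    import boto3  # imported lazily so the counting function has no dependency

    ec2 = boto3.client("ec2", region_name=region)
    return ec2.describe_network_interfaces(
        Filters=[{"Name": "vpc-id", "Values": [vpc_id]}]
    )
```

Usage would look like lambda_enis_by_placement(describe_vpc_enis("vpc-EXAMPLE", "eu-central-1")), where the VPC ID is a placeholder for the one the project created.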

So there you have it: excessive cold start times removed by a single improvement. Hugely impressive, and congratulations to everyone involved.